Does a business degree still make sense when an AI can produce a full market analysis in seconds? What about law, when ChatGPT can already deliver legal excerpts, case analyses, and argumentation strategies?

Maybe you’ve asked yourself exactly this question—and you’re not alone. Many students are currently wondering: Is my degree even worth it anymore? Or will I be replaced by AI in just a few years?

In this article, we’ll dig deep into this question. We’ll look at which fields are truly future-proof—and which skills will make you indispensable in an AI-driven world.

1. Should you still go to university in the age of AI?

Let’s start at the beginning: Artificial Intelligence is not just another tool like PowerPoint or a calculator. It’s a real revolution—comparable to the introduction of the internet or even electricity. And it is fundamentally changing how we work, communicate, and learn.

In many industries, AI is already taking over tasks that used to require years of study, especially the entry-level tasks that graduates usually start with.

Writing texts? Claude can.

Generating code? ChatGPT can.

Analyzing legal documents? DeepSeek has no problem with that.

The results aren’t perfect yet, of course, but they’re good enough to radically speed up many workflows and automate parts of them.

And that’s exactly why choosing the right degree is more important than ever. The question is no longer: Which job is safe?

But rather: Which skills can you develop that AI won’t easily learn? And where would companies still prefer YOU over an AI?

2. Three things AI (still) can’t do – and what you should study

1. Recognizing relevance – not just processing information

AI is impressive. It can analyze massive datasets, spot patterns, summarize texts, and generate seemingly intelligent answers. If you ask a language model why climate change is an emotional topic, it will give you a plausible explanation—based on countless articles and scientific texts where humans have written exactly that.

A language model recognizes that certain words often appear together. It has learned that “climate change,” “injustice,” and “global responsibility” frequently occur in the same contexts. But it has no personal experiences, no values, no stance. It doesn’t know why people feel anger or fear. It doesn’t understand emotional reality, historical context, or social conflict; it only sees patterns in language.

It can repeat what others have said—but it can’t question or reframe meaning on its own. And that’s your opportunity.

If you study philosophy, sociology, political science, or cultural studies, you’ll learn how meaning is created. Why certain narratives resonate in some cultures but not in others. Why the same sentence is trivial in one context and explosive in another. And above all: you’ll learn how to ask the right questions.

Even in a world full of data, numbers, and prompts, one thing remains: we need people who don’t just know what is happening—but who understand why it’s happening and what should happen next. People who take responsibility, who analyze, argue, and reflect. That’s something no AI can do for us.

2. Human connection and empathy

AI can write texts, often at a very high level. It can sound empathetic, friendly, and understanding. But however convincing it may seem, it’s still just a façade.

AI doesn’t feel. It doesn’t know sadness, uncertainty, or real excitement. It can’t sense when someone is only pretending to be fine. It doesn’t hear tears in a voice, notice hesitation in a glance, or catch a gesture that says it all.

And that’s the crucial difference: humans don’t just read words—they read between the lines.

That’s why everything involving genuine human interaction will remain critically important. If you want to work with people—in coaching, education, mental health, or social counseling—you won’t be replaceable by AI. In fact, you’ll be more in demand than ever.

Fields like psychology, social work, education, or communication studies don’t just teach theory. They train the practical skills of listening, building trust, and holding conversations that truly change things.

In a world where more and more communication is digital, people long all the more for genuine conversation and for someone who truly cares. The more technical our world becomes, the more valuable human qualities become.

So if you want to work with people, you don’t need to worry about your future. You’ll matter most exactly where AI can’t go.

3. Innovation doesn’t come from algorithms

AI is excellent at combining things. It analyzes existing information, finds patterns, links data points, and proposes logical, plausible solutions. Impressive—no doubt.

But what AI can’t do is think of something truly new.

It can only rely on what already exists. Its ideas are always based on what humans have already written, said, or programmed. If you ask it for an original idea, it will give you the most probable one—not the boldest.

Real innovation, however, starts when someone imagines the unlikely: when an entrepreneur asks “What if…?” and suddenly opens up a whole new perspective.

If you want to be the person who develops new solutions for real problems, you don’t just need a degree filled with facts—you need a field where you can create.

Fields like biotechnology, engineering, environmental science, and physics fit this description. They push you to expand existing knowledge and create something new.

But interdisciplinary combinations are becoming just as important: psychology with computer science, business with sustainability, design with social sciences. Companies are looking for people who can build bridges—between technology and society, between humans and machines.

If you want to truly change things, you don’t need to fear AI. You just need the courage to think further than it can and to build something of your own.

3. Which degrees could become problematic?

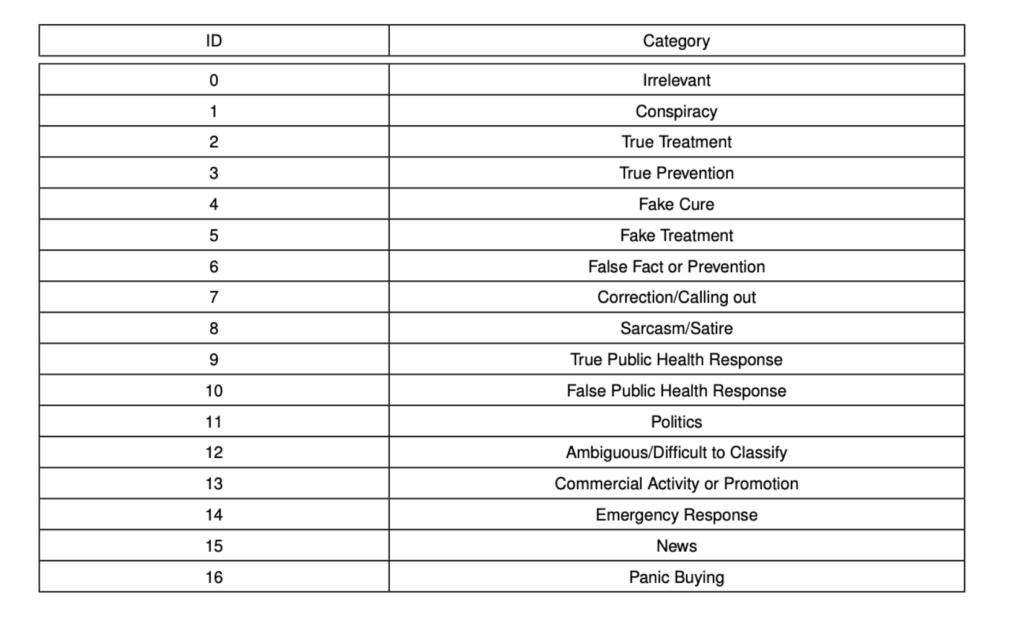

Of course, there are also fields where we have to be honest: the job market they prepare for is under pressure. Especially in areas with highly standardized, rule-based, or purely data-driven tasks, automation is moving fast.

Examples? Traditional accounting. Legal assistance. Simple translation work. Basic programming. Marketing copywriting. AI can already handle these tasks quite reliably today—and it will likely get even better.

But that doesn’t mean you should avoid business or law altogether. It just means you need a unique edge: something that sets you apart from machines and makes you irreplaceable.

That edge could be ethical reasoning, strategic thinking, interpersonal leadership, or intercultural understanding. For example, if you study law and also understand the societal and moral implications of LegalTech, you’ll have a real advantage in the future.

4. How to adapt your studies wisely

Many companies are openly saying it now: it’s not about what you study, but how you think. Can you solve problems creatively? Can you communicate clearly? Are you willing to keep learning? Can you collaborate effectively with others?

That means soft skills are more important than ever. Creativity, critical thinking, emotional intelligence, and self-organization will determine whether you thrive in a rapidly changing job market or manage to build a profitable venture of your own.

If you develop these skills during your studies (in any field), you’ll be in a strong position.

Here are some questions to guide your study choices:

- Am I just learning content—or also the systems behind it?

- Can I combine my field with technology, ethics, or social competence?

- Does my program actively use AI as a tool, or does it ignore it?

- Do I get opportunities to work on real problems—in projects, teams, or practice?

- And maybe most important: What do I genuinely enjoy, and where are my talents—even if there’s no job title for it yet?

Because in the future, jobs won’t be as clearly defined as they used to be. What will matter most is whether you can adapt and keep evolving.

Conclusion: Studying in the future means shaping the future

You don’t need to fear being replaced by AI—if you’re willing to work with it. You don’t need to become a “prompt engineer” to stay relevant. But you do need to understand where your real value lies.

Knowledge alone is no longer enough. What matters is the ability to recognize meaning, think critically, build relationships, and create new solutions. And for that, studying is still one of the best paths forward.

So don’t just ask yourself what you want to study. Ask yourself: What do I want to study for? And: How can I use my skills meaningfully in a world full of AI?